Sequential Learning for Dance Generation

Generating dance using deep learning techniques.

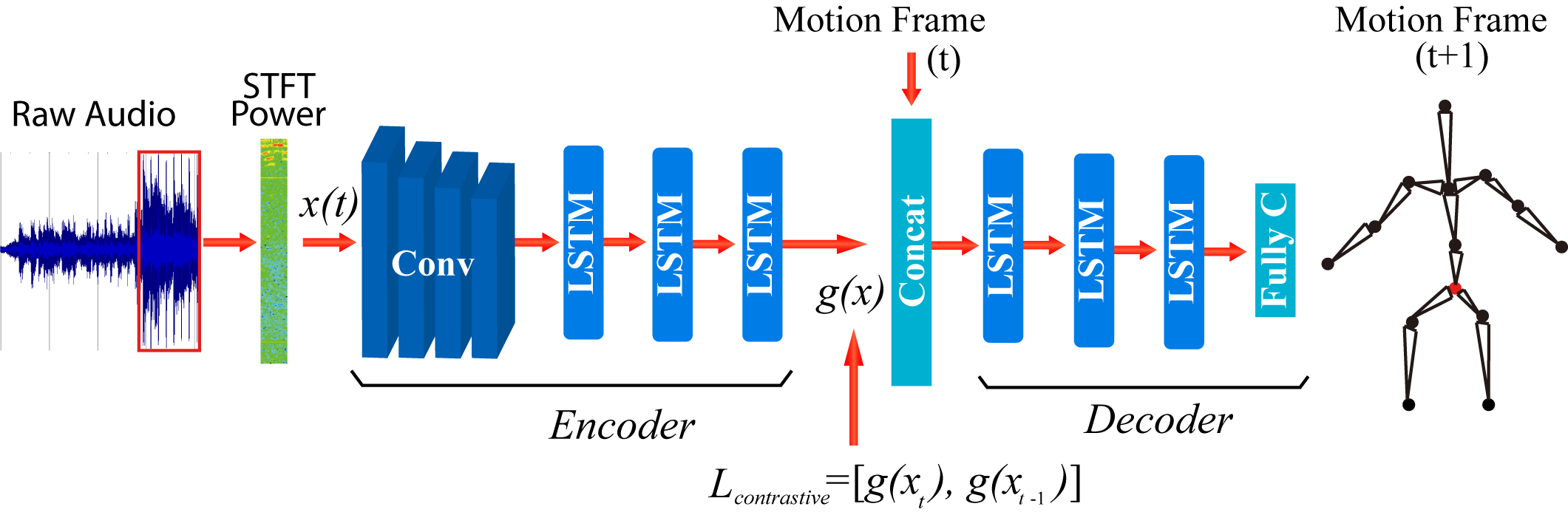

The proposed model is shown in the following image:

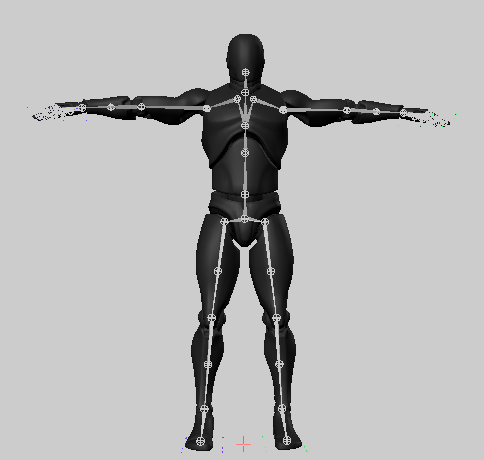

The joints of the skeleton employed in the experiment are shown in the following image:

Use of GPU

If you use a GPU in your experiment, set the --gpu option of run.sh appropriately, e.g.:

$ ./run.sh --gpu 0

The default setup uses GPU 0 (--gpu 0). For CPU execution, set --gpu to -1.
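Chainer scripts conventionally treat a negative device id as "run on the CPU". The sketch below shows how a training script might interpret the --gpu value passed through run.sh. The parser and the helper `select_device` are hypothetical illustrations, not code taken from this repository.

```python
import argparse


def parse_args(argv=None):
    # Hypothetical parser mirroring the --gpu option of run.sh.
    parser = argparse.ArgumentParser(description="Dance generation (sketch)")
    parser.add_argument("--gpu", type=int, default=0,
                        help="GPU id to use; -1 runs on the CPU")
    return parser.parse_args(argv)


def select_device(gpu_id):
    """Return 'cpu' for negative ids, otherwise 'gpu:<id>'."""
    if gpu_id < 0:
        return "cpu"
    # In a Chainer script this is typically where
    # chainer.cuda.get_device_from_id(gpu_id).use() and model.to_gpu()
    # would be called.
    return "gpu:{}".format(gpu_id)
```

For example, `select_device(parse_args(["--gpu", "-1"]).gpu)` yields `"cpu"`, while the default arguments select `"gpu:0"`.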

Execution

The main routine is executed by:

$ ./run.sh --net $net --exp $exp --sequence $sequence --epoch $epochs --stage $stage

Different types of datasets can be trained by setting $exp accordingly.

To run inside a Docker container, use run_in_docker.sh instead of run.sh.
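For scripted experiments, the same command line can be assembled from Python. This is a minimal sketch that assumes run.sh and run_in_docker.sh accept the options shown above; the function `build_run_command` and any concrete values passed to it are hypothetical, and the valid choices for --net, --exp and --stage are defined by the scripts themselves.

```python
import subprocess


def build_run_command(net, exp, sequence, epochs, stage, gpu=0, docker=False):
    """Assemble the argument list for run.sh (or run_in_docker.sh)."""
    # Assumption: run_in_docker.sh takes the same options as run.sh.
    script = "./run_in_docker.sh" if docker else "./run.sh"
    return [
        script,
        "--net", str(net),
        "--exp", str(exp),
        "--sequence", str(sequence),
        "--epoch", str(epochs),
        "--stage", str(stage),
        "--gpu", str(gpu),
    ]


def run(cmd):
    # check=True raises CalledProcessError if the script exits with an error.
    return subprocess.run(cmd, check=True)
```

Passing an argument list rather than a single shell string avoids quoting problems when values contain spaces.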

Unreal Engine 4 Visualization

To demonstrate results from evaluation files, or to test training files, use local/ue4_send_osc.py.

For real-time emulation, execute run_realtime.sh.

Requirements

The following Python libraries are required for training and evaluation:

- chainer>=3.1.0

- chainerui

- cupy>=2.1.0

- madmom

- Beat Tracking Evaluation toolbox. The original code is found here

- mir_eval

- transforms3d

- h5py, numpy, soundfile, scipy, scikit-learn, pandas
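A quick way to check the installed Python packages against this list is sketched below (Python 3.8+, standard library only). It is an assumption-laden helper, not part of the repository: the Beat Tracking Evaluation toolbox is not a pip distribution and is omitted, and CuPy wheels are often published under CUDA-specific names (e.g. `cupy-cuda11x`), so "cupy" may be reported as missing even when a CuPy build is present.

```python
import re
from importlib import metadata

# Minimum versions from the list above; None means "only check presence".
REQUIREMENTS = {
    "chainer": "3.1.0", "cupy": "2.1.0", "chainerui": None, "madmom": None,
    "mir_eval": None, "transforms3d": None, "h5py": None, "numpy": None,
    "soundfile": None, "scipy": None, "scikit-learn": None, "pandas": None,
}


def parse_version(text):
    """Turn '3.1.0' or '3.1.0rc1' into a tuple of leading integers, e.g. (3, 1, 0)."""
    parts = []
    for piece in text.split("."):
        match = re.match(r"\d+", piece)
        if match is None:
            break
        parts.append(int(match.group()))
    return tuple(parts)


def meets_minimum(installed, minimum):
    """Compare two versions numerically, padding the shorter one with zeros."""
    a, b = parse_version(installed), parse_version(minimum)
    width = max(len(a), len(b))
    a += (0,) * (width - len(a))
    b += (0,) * (width - len(b))
    return a >= b


def check_requirements(requirements):
    """Return a list of problems; an empty list means everything is satisfied."""
    problems = []
    for name, minimum in requirements.items():
        try:
            installed = metadata.version(name)
        except metadata.PackageNotFoundError:
            problems.append("{}: not installed".format(name))
            continue
        if minimum is not None and not meets_minimum(installed, minimum):
            problems.append("{}: {} < {}".format(name, installed, minimum))
    return problems
```

Calling `check_requirements(REQUIREMENTS)` and printing the result lists anything missing or too old.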

Install the following package to enable MP3 support when converting the audio files:

$ sudo apt-get install libsox-fmt-mp3
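With libsox-fmt-mp3 installed, the SoX command-line tool can read MP3 input. The sketch below wraps an MP3-to-WAV conversion with subprocess. It assumes the `sox` binary itself is installed (Debian/Ubuntu package `sox`); the 16 kHz mono target and the function names are illustrative assumptions, since the sample rate expected by the training scripts is not stated here.

```python
import shutil
import subprocess


def mp3_to_wav_command(src, dst, rate=16000, channels=1):
    """Build: sox <src> -r <rate> -c <channels> <dst>."""
    # Format options placed before the output file apply to the output.
    return ["sox", src, "-r", str(rate), "-c", str(channels), dst]


def convert(src, dst, **kwargs):
    if shutil.which("sox") is None:
        raise RuntimeError("sox not found; install it, e.g. apt-get install sox")
    subprocess.run(mp3_to_wav_command(src, dst, **kwargs), check=True)
```

SoX inserts the required rate and channel conversion effects automatically when the output format differs from the input.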

Additionally, you may need Marsyas to extract the beat reference information.

For real-time emulation:

- pyOSC (for Python 2)

- python-osc (for Python 3)

- vlc (optional)

ToDo:

- New dataset

- Detailed audio information

- Virtual environment release

Acknowledgement

- Thanks to Johnson Lai for the comments

References

[1] Nelson Yalta, Shinji Watanabe, Kazuhiro Nakadai, Tetsuya Ogata, “Weakly Supervised Deep Recurrent Neural Networks for Basic Dance Step Generation”, arXiv

[2] Nelson Yalta, Kazuhiro Nakadai, Tetsuya Ogata, “Sequential Deep Learning for Dancing Motion Generation”, SIG-Challenge 2016